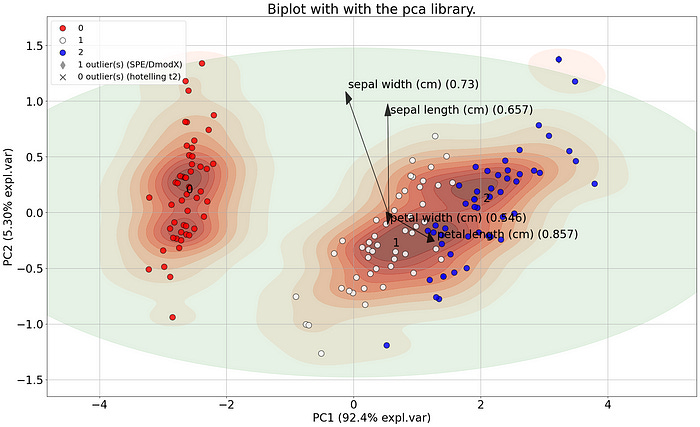

Principal Component Analysis (PCA) is one of the most widely used techniques for (big) data analysis. However, interpreting the variance in the low-dimensional space can be challenging. Understanding the loadings and interpreting the biplot is essential for anyone who uses PCA. Here I will explain i) how to interpret the loadings to gain in-depth insights and (visually) explain the variance in your data, ii) how to select the most informative features, iii) how to create insightful plots, and iv) how to detect outliers. The theory is backed by a practical, hands-on guide for getting the most out of your data with pca.
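To make the idea of loadings concrete, here is a minimal NumPy sketch. It is an illustration only, not the author's implementation or the API of any PCA library. It centers the data, computes the principal axes with a singular value decomposition, and treats each row of the right singular vectors as the loadings of one principal component. This is the unit-length convention used by scikit-learn's `PCA.components_`; some texts instead scale loadings by the square root of the eigenvalues. The helper names `pca_loadings` and `top_features`, as well as the synthetic data, are hypothetical. The feature with the largest absolute loading on a component contributes most to it, which gives one simple way to rank informative features.

```python
import numpy as np

# Hypothetical helpers: a sketch of PCA loadings, not the pca library's API.

def pca_loadings(X, n_components=2):
    """Return scores, loadings and explained-variance ratio of a centered PCA.

    Loadings are the unit-length principal axes: one row per component,
    one column per feature (same convention as scikit-learn's components_).
    """
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)                      # center each feature
    # SVD of the centered data: Xc = U @ diag(S) @ Vt; rows of Vt are the axes
    U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained = S**2 / np.sum(S**2)              # fraction of variance per PC
    loadings = Vt[:n_components]                 # shape (n_components, n_features)
    scores = Xc @ loadings.T                     # project samples onto the PCs
    return scores, loadings, explained[:n_components]


def top_features(loadings, feature_names, n=1):
    """For each PC, return the n feature names with the largest |loading|."""
    result = []
    for pc in loadings:
        order = np.argsort(np.abs(pc))[::-1][:n]
        result.append([feature_names[i] for i in order])
    return result


# Usage on synthetic data (hypothetical): three independent features
# with very different variances.
rng = np.random.default_rng(0)
X = np.column_stack([
    10.0 * rng.normal(size=200),   # feature "a": large variance
    1.0 * rng.normal(size=200),    # feature "b": moderate variance
    0.1 * rng.normal(size=200),    # feature "c": nearly constant
])
names = ["a", "b", "c"]

scores, loadings, explained = pca_loadings(X, n_components=2)
print("explained variance ratio:", explained)
print("top feature per PC:", top_features(loadings, names))
```

Because feature `a` has by far the largest variance, it should dominate PC1, and `b` should dominate PC2. On real data, standardize the features first when they are measured on different scales; otherwise the feature with the largest raw variance will dominate the first component regardless of how informative it is.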

What Are PCA Loadings And How To Effectively Use Biplots?

A practical guide for getting the most out of Principal Component Analysis.

Dec 27, 2024


Causal Discovery

Learn the core concepts of machine learning, causal discovery, and data visualization through clear, hands-on Python examples. Master both theory and practice to apply these techniques confidently in real-world scenarios!
