Understanding your data is an important step in data science. However, if you don’t make any assumptions about the data, it can become a time-consuming and challenging step. It is easy to neglect this step and jump into the water too soon. Understanding the multicollinearity in the data set can make the difference between a failed project and a successful one!

One way to detect multicollinearity in a data set is the hypergeometric test. By applying the test to many pairs of variables, the relationships across variables can be visualized as a network. Notably, steps such as one-hot encoding and multiple test correction need to be performed to get reliable results. This entire approach is implemented in a method named HNet [1,2,3]. It stands for Graphical Hypergeometric Networks and allows you to easily explore your data for multicollinearity and retrieve new, meaningful insights. In this article, I will discuss the importance of data understanding, followed by a practical example.

Data understanding is a crucial step.

Real-world data often contains measurements with both continuous and discrete values. We need to look at each variable and think carefully about its meaning and importance for the specific problem. But it is more than looking at one variable at a time (univariate). The time-consuming part kicks in hard if no assumptions are made about the data and the variables are examined in a multivariate manner: the search space becomes super-exponential in the number of variables. Skip it? No! Learn to swim! It is extremely important to explore the data thoroughly before starting any analysis [4].

Data exploration will give hints and clues for the tasks ahead.

Based on these hints and clues, you can decide which models are candidates for the analysis and how likely you are to get reasonable results. Despite the availability of many libraries, datasets still require intensive pre-processing, and exploratory analysis remains a complex and time-consuming task.

This is where HNet comes into play: it uses statistical tests to determine the significant relationships across variables. The cool part is that you can simply feed your raw, unstructured data into the model. The output is a network that can shed light on the complex relationships across variables.

Pre-processing vs. Exploring

Data exploration usually goes hand in hand with the pre-processing step. But there are differences:

Pre-processing is, among other things, about:

Normalizing, standardizing, scaling.

Encoding variables.

Cleaning such as removal or imputation.

Exploring to understand is, among other things, about:

What are the relationships between variables?

Does the data make sense?

Is there any bias?

What is the underlying data distribution?

Univariate/multivariate analysis

Exploratory analysis can be quite challenging because there is no specific question to pursue. This is the part where you need to get a feel for the data. One way to get a “feel” for the data is by understanding the relationships between variables.

HNet: Hypergeometric Networks.

Let’s jump into the methodology of HNet. The aim is to determine a network with significant associations that can shed light on the complex relationships across variables. The input can range from generic data frames to nested data structures with lists, missing values, and enumerations.

HNet learns associations from data sets with mixed data types and with unknown function.

This means that datasets that contain features such as categorical, Boolean, and/or continuous values can be used. In addition, you do not need to specify a target (or response) variable as it will work in an unsupervised manner.

The architecture of HNet

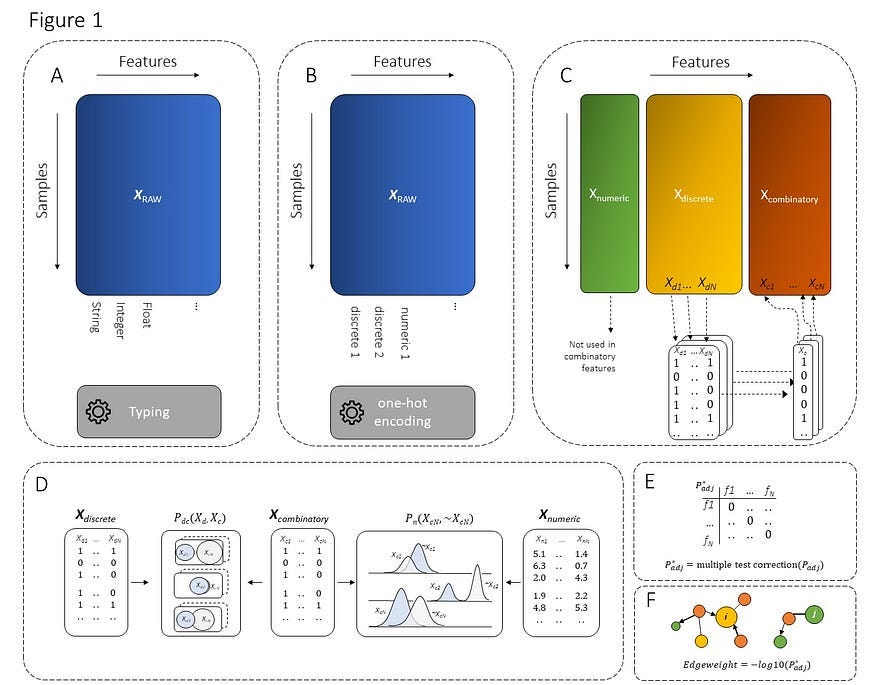

The architecture of HNet is a multi-step process (Figure 1) in which the first two steps process the raw unstructured data through typing and one-hot encoding.

Typing: The first step in the process is to type each feature as categoric, numeric, or exclude. The typing is either user-defined or automatically determined. In the latter case, features are set to numeric if their values are of floating-point type or have more than a minimum number of unique elements (e.g., if the number of unique elements is more than 20% of the total number of non-missing values).
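To make the automatic typing rule concrete, here is a minimal sketch in pandas. It follows the rule as described above, but it is not HNet's actual code: the helper name `infer_feature_type`, the labels `"num"`/`"cat"`/`"exclude"`, and the toy values are all assumptions made up for illustration.

```python
import pandas as pd

def infer_feature_type(col: pd.Series, min_unique_frac: float = 0.2) -> str:
    """Hypothetical helper: label a column as 'num', 'cat', or 'exclude'."""
    values = col.dropna()
    if values.empty:
        # Nothing to learn from a column that is entirely missing.
        return "exclude"
    if pd.api.types.is_float_dtype(values):
        # Floating-point columns are treated as numeric.
        return "num"
    if values.nunique() / len(values) > min_unique_frac:
        # Many distinct values relative to the non-missing count.
        return "num"
    return "cat"

# Toy data, invented for illustration only.
df = pd.DataFrame({
    "fare": [7.25, 71.28, 7.92, 53.1, 8.05, 8.46, 51.86, 21.07, 11.13, 30.07],
    "sex": ["male", "female", "female", "female", "male",
            "male", "male", "male", "female", "female"],
    "pclass": [3, 1, 3, 1, 3, 3, 1, 3, 3, 1],
})

types = {name: infer_feature_type(df[name]) for name in df.columns}
print(types)
```

On a data set this small, the 20% threshold is fragile: one extra distinct value in `pclass` would flip it to numeric. That is one reason a user-defined typing can be the safer choice.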

One-hot encoding: The second step is encoding the categoric values into a one-hot dense array. This is done using the df2onehot library. The one-hot dense array is subsequently used for statistical inference.
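If you have never looked at a one-hot dense array, the sketch below shows what the encoding step produces. Note that I use `pandas.get_dummies` as a stand-in here, not df2onehot itself, and the two toy columns are made up for illustration.

```python
import pandas as pd

# Toy categoric data, invented for illustration only.
df = pd.DataFrame({
    "sex": ["male", "female", "female", "male"],
    "embarked": ["S", "C", "S", "Q"],
})

# Each category value becomes its own Boolean column named <feature>_<value>.
onehot = pd.get_dummies(df, prefix_sep="_", dtype=bool)
print(onehot)
```

Every resulting column is a yes/no statement about a sample (for example `sex_female`), which is exactly the form the hypergeometric test needs.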

Statistical inference: This step contains two statistical tests. (1) Each one-hot encoded feature is tested for significance against all other one-hot encoded features (Xdiscrete) using the hypergeometric test. (2) To assess significance between the numeric features (Xnumeric) and the dense array (Xdiscrete), the Mann-Whitney U test is used. Each numeric vector is split on the categoric feature (Xci versus ~Xci) and the two groups are tested for a significant difference.
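The second test, the split of a numeric vector on a one-hot column, can be sketched with SciPy as follows. The values and the variable names are made up for illustration, and the two-sided alternative is my assumption, not necessarily the setting HNet uses.

```python
import numpy as np
from scipy.stats import mannwhitneyu

# Toy numeric feature and one one-hot column, invented for illustration only.
fare = np.array([7.3, 71.3, 7.9, 53.1, 8.1, 8.5, 51.9, 21.1, 11.1, 30.1])
is_first_class = np.array([False, True, False, True, False,
                           False, True, False, False, False])

# Split the numeric vector on the categoric feature: Xci versus ~Xci.
in_group = fare[is_first_class]
out_group = fare[~is_first_class]

# Assumption: a two-sided test for a difference between the two groups.
result = mannwhitneyu(in_group, out_group, alternative="two-sided")
print(result.pvalue)
```

Repeating this for every numeric feature against every one-hot column yields the categoric-numeric part of the P-value matrix.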

Multiple test correction: After the statistical inference step, all tested edge probabilities between pairs of vertices, either categoric-categoric or categoric-numeric, are stored in the adjacency matrix (Padj) and are corrected for multiple testing. The default multiple test correction method is Holm (Figure 1E). Various other false discovery rate (FDR) and familywise error rate (FWER) methods are available as options.
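To show what the Holm correction does to a set of P-values, here is a small plain-Python sketch of the standard step-down procedure. The function name `holm_adjust` and the raw P-values are hypothetical; in practice you would likely rely on a library routine such as `multipletests(..., method="holm")` from statsmodels.

```python
def holm_adjust(pvalues):
    """Return Holm step-down adjusted P-values in the original order."""
    m = len(pvalues)
    # Visit the P-values from smallest to largest.
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The smallest P-value is multiplied by m, the next by m - 1, and so on.
        candidate = min(1.0, (m - rank) * pvalues[idx])
        # Enforce monotonicity: an adjusted value never drops below an earlier one.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted

# Made-up raw P-values for four hypothetical edges.
raw = [0.01, 0.04, 0.03, 0.005]
adjusted = holm_adjust(raw)
print(adjusted)
```

The more tests you run, the larger the multipliers become, which is why weak associations tend to disappear from the network after correction.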

Filtering on significant edges: The last step (Figure 1F) is declaring significance for edges. An edge is called significant at alpha = 0.05 (default). The edge weight is computed as -log10(Padj).
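The filtering and weighting can be sketched in a few lines of NumPy. The node labels and adjusted P-values below are made up, and treating an edge as significant when Padj <= alpha (rather than strictly below) is my assumption.

```python
import numpy as np

labels = ["sex_female", "survived_1", "pclass_3"]

# Hypothetical adjusted P-values (symmetric), invented for illustration only.
p_adj = np.array([
    [1.0,  1e-8,  0.30],
    [1e-8, 1.0,   0.002],
    [0.30, 0.002, 1.0],
])
alpha = 0.05

# Keep only edges that pass the significance threshold (assumed: Padj <= alpha).
significant = p_adj <= alpha

# Edge weight is -log10(Padj); non-significant edges get weight 0.
weights = np.where(significant, -np.log10(p_adj), 0.0)
np.fill_diagonal(weights, 0.0)  # no self-loops
print(weights)
```

The log transform turns very small P-values into large, easy-to-compare edge weights, so stronger associations show up as heavier edges.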

The final output is an adjacency matrix that contains nodes and edge weights that depict the strength of association between pairs of vertices. The adjacency matrix can then be examined as a network representation or as a heatmap.

Compute Multicollinearity.

When we talk about multicollinearity, we mean that certain values of one variable tend to co-occur with certain values of another variable. From a statistical point of view, there are many measures of association (such as the Chi-square test, Fisher's exact test, and the hypergeometric test), which are often used when one or both of the variables are ordinal or nominal. I will demonstrate by example how the hypergeometric test can be used to analyze whether two variables of the Titanic dataset are associated. It is well known for this dataset that sex (female) is a good predictor of survival. For more information and univariate analysis, see Kaggle [5] and blogs [6]. Let’s compute the association between survived and female.
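As a minimal sketch of that computation, the snippet below evaluates the upper tail of the hypergeometric distribution in plain Python. The helper name `hypergeom_upper_tail` is hypothetical, and the counts are the ones commonly reported for the Kaggle Titanic training split (891 passengers, 342 survivors, 314 females, 233 female survivors); treat them as assumptions and verify them against your own copy of the data.

```python
from fractions import Fraction
from math import comb

def hypergeom_upper_tail(x, N, K, n):
    """P(X >= x) when drawing n items from N, of which K are successes."""
    total = comb(N, n)
    upper = min(K, n)
    # Sum the exact probabilities of observing x, x + 1, ..., upper successes.
    tail = sum(comb(K, k) * comb(N - K, n - k) for k in range(x, upper + 1))
    return float(Fraction(tail, total))

# Assumed counts from the Kaggle Titanic training split.
N = 891  # total passengers
K = 342  # passengers who survived
n = 314  # female passengers
x = 233  # female passengers who survived

p_value = hypergeom_upper_tail(x, N, K, n)
print(f"P(X >= {x}) = {p_value:.3e}")
```

Under independence you would expect roughly 314 * 342 / 891, or about 120, female survivors. Observing 233 is far out in the tail, so the resulting P-value is vanishingly small and the association between survived and female is highly significant.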
